Cassandra SSL for vCloud Director metrics database

In vCloud Director v9 the requirement for both Apache Cassandra and KairosDB for storing metrics has been reduced to just Apache Cassandra. In addition, the ability to view VM metrics directly in the new vCD 9 HTML5 tenant UI makes it much more important to have a reliable Cassandra infrastructure.

As I found when researching this post, configuring SSL in Cassandra is a bit of a pain. Because Cassandra runs as a Java application, it has some issues with the types of CA certificates and trusts it can use, and this is further complicated by having separate options for node-to-node encryption and for client-to-node encryption.

I set out to produce an 'easy' way to configure a Cassandra cluster with SSL for both node to node and node to client communication in a way which could be reasonably easily implemented and reproduced for future installs.

My test environment consists of the minimum supported configuration of 4 Cassandra nodes (of which 2 are 'seed' nodes) running on CentOS Linux 7 (since that's what we tend to use most for our backend infrastructure services). This configuration can almost certainly be adapted for other Linux distributions and I've tried to document the certificate generation process sufficiently that this will be straightforward.

Inspiration for this post was from Antoni Spiteri's blog and script to configure a Cassandra cluster for vCloud Director metrics which I found extremely useful background.

Initial setup of my node servers used the following pattern to install and configure Cassandra:

Ensure all available updates have been applied to the new CentOS installation:

# yum update -y

Install Java (currently on my systems this installs java-1.8.0-openjdk.x86_64 1:1.8.0.161-b14.el7_4 which appears to work fine):

# yum install -y java

Create a file /etc/yum.repos.d/cassandra.repo (with vi or your favourite text editor) to include the Cassandra 3.0.x repository with the following contents. Note that I'm using the Cassandra 3.0.x repository (30X) and not the latest 311x release repository as this is not yet supported by VMware for vCloud Director:

    [cassandra]
    name=Apache Cassandra
    baseurl=https://www.apache.org/dist/cassandra/redhat/30x/
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://www.apache.org/dist/cassandra/KEYS

Install the Cassandra software itself (currently on my test nodes this pulls in the cassandra.noarch 0:3.0.15-1 package):

# yum install -y cassandra

The main configuration file for Cassandra is (on CentOS installed from this repository) in /etc/cassandra/conf/cassandra.yaml.

At least the following options in this file will need to be changed before we can get a cluster up and running:

| Original cassandra.yaml | Edited cassandra.yaml | Notes |
|---|---|---|
| cluster_name: 'Test Cluster' | cluster_name: 'My vCD Cluster' | Doesn't absolutely have to be changed, but you probably should. Note that this setting must match exactly on each of your node servers or they won't be able to join the cluster. |
| authenticator: AllowAllAuthenticator | authenticator: PasswordAuthenticator | We'll want to use password security from vCloud Director to the cluster. |
| authorizer: AllowAllAuthorizer | authorizer: CassandraAuthorizer | Required to enforce password security. |
| - seeds: "127.0.0.1" | - seeds: "Seed Node 1 IP address,Seed Node 2 IP address" | Set the seeds for the cluster (minimum 2 nodes must be configured as seeds). |
| listen_address: localhost | listen_address: This node IP address | Must be configured for the node to listen for non-local traffic (from other nodes and clients). |
| rpc_address: localhost | rpc_address: This node IP address | Must be configured for the node to listen for non-local traffic (from other nodes and clients). |
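If you'd rather not hand-edit the file on every node, the changes in the table can be scripted. The following is only a sketch: the function name `apply_basic_settings` is my own invention, it assumes GNU sed (as shipped with CentOS 7), and it assumes each setting appears once, uncommented, at the start of a line as in the stock cassandra.yaml. Values containing `|` or `&` would confuse the sed expressions, so take a backup of the file first.

```shell
#!/bin/sh
# Sketch: apply the basic cluster settings from the table to a cassandra.yaml file.
# Usage: apply_basic_settings FILE CLUSTER_NAME SEED_LIST NODE_IP
# (hypothetical helper, assumes GNU sed and the stock file layout)
apply_basic_settings() {
    file=$1
    cluster=$2
    seeds=$3
    ip=$4
    sed -i \
        -e "s|^cluster_name:.*|cluster_name: '${cluster}'|" \
        -e "s|^authenticator:.*|authenticator: PasswordAuthenticator|" \
        -e "s|^authorizer:.*|authorizer: CassandraAuthorizer|" \
        -e "s|^\([[:space:]]*- seeds:\).*|\1 \"${seeds}\"|" \
        -e "s|^listen_address:.*|listen_address: ${ip}|" \
        -e "s|^rpc_address:.*|rpc_address: ${ip}|" \
        "$file"
}
```

On node03 of my test cluster this would be called as `apply_basic_settings /etc/cassandra/conf/cassandra.yaml 'My vCD Cluster' '10.0.0.101,10.0.0.102' 10.0.0.103`.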

We'll need to change some additional settings later to implement SSL security, but these settings should be enough to get the cluster functioning.

You'll also need to permit the Cassandra traffic through the default CentOS 7 firewall. The following commands (run as root) will open the appropriate ports:

    firewall-cmd --zone public --add-port 7000/tcp --add-port 7001/tcp --add-port 7199/tcp --add-port 9042/tcp --add-port 9160/tcp --add-port 9142/tcp --permanent
    firewall-cmd --reload

Once you've performed these steps on each of the 4 nodes, you should be able to bring up a (non-encrypted) Cassandra cluster by running:

service cassandra start

on each node. You should probably also enable the service to start automatically when the server reboots:

chkconfig cassandra on

Note that you should start the nodes one at a time, allowing a minimum of 30 seconds between each so the cluster can reconfigure as each node is added. Check /var/log/cassandra/cassandra.log and /var/log/cassandra/system.log if you have issues with the cluster forming.

My test cluster has 4 nodes (named node01, node02, node03 and node04 imaginatively enough), and node01 and node02 are the seeds. The IP addresses are 10.0.0.101,102,103 and 104 (/24 netmask).

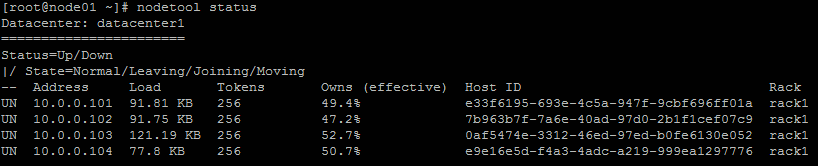

If everything has worked ok running 'nodetool status' on any node should show the cluster members all in a state of 'UN' (Up/Normal):
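Rather than eyeballing the output after each node start, the 'UN' check can be scripted. This is a rough sketch under my own assumptions: the function names are hypothetical, it relies on `nodetool status` printing the two-letter state as the first field of each node line, and the 30 x 10 second polling budget is an arbitrary choice.

```shell
#!/bin/sh
# Count the nodes reported as UN (Up/Normal) in `nodetool status` output read from stdin.
count_up_normal() {
    awk '$1 == "UN" { n++ } END { print n + 0 }'
}

# Poll until at least EXPECTED nodes are UN, giving up after roughly 5 minutes.
# Usage: wait_for_cluster EXPECTED_NODE_COUNT
wait_for_cluster() {
    expected=$1
    tries=0
    while [ "$tries" -lt 30 ]; do
        up=$(nodetool status 2>/dev/null | count_up_normal)
        if [ "$up" -ge "$expected" ]; then
            return 0
        fi
        tries=$((tries + 1))
        sleep 10
    done
    return 1
}
```

After starting the second node, for example, `wait_for_cluster 2 && echo "ready for the next node"` gives a simple gate before moving on.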

You should also be able to log in to the cluster via any of the nodes using the cqlsh command (the default password for the built-in cassandra user is 'cassandra'):

    [root@node01 ~]# cqlsh 10.0.0.101 -u cassandra -p cassandra
    Connected to My vCD Cluster at 10.0.0.101:9042.
    [cqlsh 5.0.1 | Cassandra 3.0.15 | CQL spec 3.4.0 | Native protocol v4]
    Use HELP for help.
    cassandra@cqlsh>

To reconfigure the cluster for SSL encrypted communication we need to complete a number of tasks:

- Create a new certificate authority (CA). We could use an existing or external CA, but I'm trying to keep this simple.

- Export the public key for our new CA so we can tell vCloud Director later to trust certificates it has issued.

- Create a new truststore for Cassandra so Cassandra trusts our CA.

- Create a new keystore for Cassandra for each node which includes the public key of our CA.

- Create a certificate request from each node and submit this to our CA for signing.

- Import the certificate response from the CA into the keystore for each node.

- Move the generated truststore and keystore into appropriate locations and configure security on the files.

- Reconfigure Cassandra to enable encryption and use our certificates.

That's a lot of steps to go through and extremely tedious to get right, so I wrote a script to do most of them. Create a file on one of the node servers (I called mine 'gencasscerts.sh') and copy/paste the script contents from below:

    #!/bin/bash

    NODENAMES=(node01 node02 node03 node04)

    STORETYPE="PKCS12"
    CASTOREPASS="capassword"
    NODESTOREPASS="nodepassword"
    CLUSTERCN="cassandra"
    CERTOPTS="OU=MyDept,O=MyOrg,L=MyCity,S=MyState,C=US"

    # Generate our CA certificate
    /usr/bin/keytool -genkey -keyalg RSA -keysize 2048 -alias myca \
        -keystore myca.p12 -storepass "${CASTOREPASS}" -storetype "${STORETYPE}" \
        -validity 3650 -dname "CN=${CLUSTERCN},${CERTOPTS}"

    # Export the public key of our new CA to myca.pem:
    /usr/bin/openssl pkcs12 -in myca.p12 -nokeys -out myca.pem -passin "pass:${CASTOREPASS}"

    # Export the private key of our new CA to myca.key:
    /usr/bin/openssl pkcs12 -in myca.p12 -nodes -nocerts -out myca.key -passin "pass:${CASTOREPASS}"

    # Create a truststore that only includes the public key of our CA
    /usr/bin/keytool -importcert -keystore .truststore -storepass "${CASTOREPASS}" \
        -storetype "${STORETYPE}" -file myca.pem -noprompt

    # Process each node in turn ($i is the array index, used only for the progress message)
    for i in "${!NODENAMES[@]}"; do
        curnode="${NODENAMES[i]}"
        echo "Processing node $i: ${curnode}"

        # Generate node certificate:
        /usr/bin/keytool -genkey \
            -keystore "${curnode}.p12" -storepass "${NODESTOREPASS}" -storetype "${STORETYPE}" \
            -keyalg RSA -keysize 2048 -validity 3650 \
            -alias "${curnode}" -dname "CN=${curnode},${CERTOPTS}"

        # Import the root CA public cert to our node cert
        # (store type given explicitly, to match the other keytool calls):
        /usr/bin/keytool -import -trustcacerts -noprompt -alias myca \
            -keystore "${curnode}.p12" -storepass "${NODESTOREPASS}" -storetype "${STORETYPE}" \
            -file myca.pem

        # Generate node CSR:
        /usr/bin/keytool -certreq -alias "${curnode}" -keyalg RSA -keysize 2048 \
            -keystore "${curnode}.p12" -storepass "${NODESTOREPASS}" -storetype "${STORETYPE}" \
            -file "${curnode}.csr"

        # Sign the node CSR from our CA:
        /usr/bin/openssl x509 -req -CA myca.pem -CAkey myca.key -in "${curnode}.csr" \
            -out "${curnode}.crt" -days 3650 -CAcreateserial

        # Import the signed certificate into our node keystore:
        /usr/bin/keytool -import -keystore "${curnode}.p12" -storepass "${NODESTOREPASS}" \
            -storetype "${STORETYPE}" -file "${curnode}.crt" -alias "${curnode}"

        # Collect the files for this node in a directory named after it
        mkdir -p "${curnode}"
        cp .truststore "${curnode}/.truststore"
        cp "${curnode}.p12" "${curnode}/.keystore"
        cp "${curnode}.crt" "${curnode}/client.pem"
    done

Update 2018/01/26: I realised shortly after publishing that the original version of this script included both the public and private keys for the CA in the .truststore keystore. While this isn't a huge issue in a private environment it's definitely not 'best practice' to distribute the private key of the CA to each node so I've refined the script and removed the private keys from the generated .truststore files in this version. If you need to regenerate your environment keys remember that if you re-run the script both the .truststore and .keystore files will need to be updated on each node.

Make the script executable using:

chmod u+x gencasscerts.sh

Edit the settings and passwords for the certificates (we'll need these later) in the variables at the top of the file to be appropriate for your environment (in particular the node names in the 3rd line) and any other options you want to change. The default validity period of the generated certificates is set to 10 years (3650 days). Obviously you should also change the passwords in your copy of the script for the CASTOREPASS and NODESTOREPASS variables.

When you run the script you should get output similar to the following:

    [root@node01 ~]# ./gencasscerts.sh
    MAC verified OK
    MAC verified OK
    Processing node 0: node01
    Certificate was added to keystore
    Signature ok
    subject=/C=US/ST=MyState/L=MyCity/O=MyOrg/OU=MyDept/CN=node01
    Getting CA Private Key
    Certificate reply was installed in keystore
    Processing node 1: node02
    Certificate was added to keystore
    Signature ok
    subject=/C=US/ST=MyState/L=MyCity/O=MyOrg/OU=MyDept/CN=node02
    Getting CA Private Key
    Certificate reply was installed in keystore
    Processing node 2: node03
    Certificate was added to keystore
    Signature ok
    subject=/C=US/ST=MyState/L=MyCity/O=MyOrg/OU=MyDept/CN=node03
    Getting CA Private Key
    Certificate reply was installed in keystore
    Processing node 3: node04
    Certificate was added to keystore
    Signature ok
    subject=/C=US/ST=MyState/L=MyCity/O=MyOrg/OU=MyDept/CN=node04
    Getting CA Private Key
    Certificate reply was installed in keystore

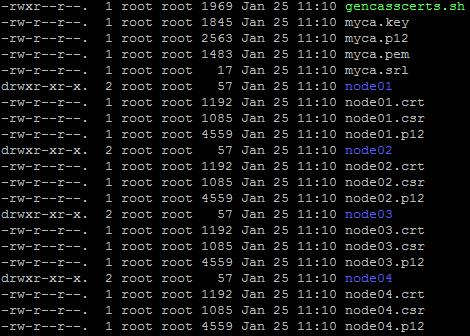

Looking at the directory from where the script was run you should see the certificate files:

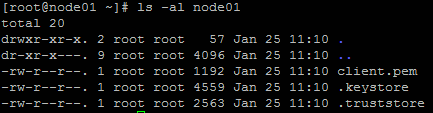

In each node directory there will be 3 files (.keystore, .truststore and chain.pem):

The .truststore files will all be identical between the node directories; the .keystore and client.pem files will be unique to each node.

Next we need to move the generated certificate stores to an appropriate location. The easiest way to do this is to use scp from the directory where the script was run. We'll place the files in the Cassandra configuration directory (/etc/cassandra/conf).

On the node where the files have been generated we can just copy them (node01 in our case):

# cp node01/.keystore node01/.truststore /etc/cassandra/conf

To copy the appropriate files to the other nodes we can use scp:

    scp node02/.keystore node02/.truststore root@10.0.0.102:/etc/cassandra/conf
    scp node03/.keystore node03/.truststore root@10.0.0.103:/etc/cassandra/conf
    scp node04/.keystore node04/.truststore root@10.0.0.104:/etc/cassandra/conf
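With more nodes, typing these out gets error-prone, so a small generator can help. This is just a sketch with a made-up helper name (`print_copy_commands`); it takes hypothetical `name=address` pairs and only prints the scp commands so they can be checked before anything is copied.

```shell
#!/bin/sh
# Print (dry run) the scp command for each "name=address" pair given as an argument.
print_copy_commands() {
    for pair in "$@"; do
        node=${pair%%=*}   # text before the first '='
        addr=${pair#*=}    # text after the first '='
        echo "scp ${node}/.keystore ${node}/.truststore root@${addr}:/etc/cassandra/conf"
    done
}

# The three remote nodes from my test cluster:
print_copy_commands node02=10.0.0.102 node03=10.0.0.103 node04=10.0.0.104
```

Once the printed commands look right, piping the output to `sh` will run them.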

On each node we now run the following to set appropriate permissions and ownership on the files:

    chown cassandra:cassandra /etc/cassandra/conf/.keystore /etc/cassandra/conf/.truststore
    chmod 400 /etc/cassandra/conf/.keystore /etc/cassandra/conf/.truststore
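Since a wrong owner or mode on these files is an easy mistake to make on one node out of four, a quick check is worthwhile. The helper below is a sketch of my own (the name `check_store_modes` is hypothetical) and assumes GNU stat from coreutils, as found on CentOS 7. It prints the mode and owner of each file and fails if any file is not mode 400; ownership is only reported, not enforced.

```shell
#!/bin/sh
# Report octal mode and owner:group for each file given.
# Returns 0 if every file is mode 400, 1 if any is not, 2 if a file cannot be read.
check_store_modes() {
    rc=0
    for f in "$@"; do
        mode=$(stat -c '%a' "$f") || return 2
        owner=$(stat -c '%U:%G' "$f")
        echo "$f mode=$mode owner=$owner"
        if [ "$mode" != "400" ]; then
            rc=1
        fi
    done
    return $rc
}
```

For example: `check_store_modes /etc/cassandra/conf/.keystore /etc/cassandra/conf/.truststore`, where the owner shown should be cassandra:cassandra.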

We can now reconfigure the cassandra.yaml configuration file on each node to use our new certificates and enable encrypted communication.

The original settings from cassandra.yaml and the required changes are:

In the server_encryption_options: section to encrypt node-to-node communications:

| Original Value | New Value |
|---|---|
| internode_encryption: none | internode_encryption: all |
| keystore: conf/.keystore | keystore: <location of copied .keystore file> |
| keystore_password: cassandra | keystore_password: <NODESTOREPASS value from the script> |
| truststore: conf/.truststore | truststore: <location of copied .truststore file> |
| truststore_password: cassandra | truststore_password: <CASTOREPASS value from the script> |

Optionally you can also change the cipher_suites: setting to restrict the available ciphers to the more secure ones (e.g. cipher_suites: [TLS_RSA_WITH_AES_256_CBC_SHA]).

In the client_encryption_options: section to encrypt node-to-client communications:

| Original Value | New Value |
|---|---|
| enabled: false | enabled: true |
| keystore: conf/.keystore | keystore: <location of copied .keystore file> |
| keystore_password: cassandra | keystore_password: <NODESTOREPASS value from the script> |

Again you can change the cipher_suites: setting if desired to use more secure ciphers.
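Before restarting anything, it can be useful to confirm that both sections were edited consistently on every node. Here is a minimal sketch, assuming the two sections start at column 0 and their settings are indented beneath them as in the stock cassandra.yaml; the function name `show_encryption_options` is hypothetical. It deliberately leaves out the password lines so the output is safe to paste into a ticket or chat.

```shell
#!/bin/sh
# Print the (non-password) encryption settings from both encryption sections of cassandra.yaml.
# Usage: show_encryption_options /etc/cassandra/conf/cassandra.yaml
show_encryption_options() {
    awk '
        # Section headers start at column 0
        /^(server|client)_encryption_options:/ { in_section = 1; print; next }
        # Any other uncommented top-level key ends the current section
        /^[^ \t#]/ { in_section = 0 }
        # Within a section, show only the settings changed in this post
        in_section && /^[ \t]+(internode_encryption|enabled|keystore|truststore):/ { print }
    ' "$1"
}
```

Running this on each node and comparing the output should show identical settings everywhere, since the keystore and truststore paths are the same on all nodes.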

Now we need to stop the cassandra service on ALL nodes:

service cassandra stop

When you start the cassandra service back up (service cassandra start, remembering to wait between each node to give the cluster time to settle), you should see the following in the /var/log/cassandra/system.log file:

INFO [main] 2018-01-25 20:52:38,290 MessagingService.java:541 - Starting Encrypted Messaging Service on SSL port 7001
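To check for this message without scrolling through the log, a one-line grep is enough. The wrapper below is just a sketch (the function name is my own) that succeeds only if the log contains the message text shown above.

```shell
#!/bin/sh
# Succeed if the given Cassandra system.log shows the encrypted messaging service starting.
encrypted_messaging_started() {
    grep -q 'Starting Encrypted Messaging Service on SSL port' "$1"
}
```

For example: `encrypted_messaging_started /var/log/cassandra/system.log && echo "node-to-node SSL is active"`. Note that an old log entry from a previous start would also match, so check the timestamp if in doubt.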

Once the nodes are all back up and running, nodetool status should show them all with a status of 'UN' (Up/Normal).

If we attempt to use cqlsh to connect to the cluster now, we should get an error as we're not using an encrypted connection:

    cqlsh 10.0.0.101 -u cassandra -p cassandra

    Connection error: ('Unable to connect to any servers', {'10.0.0.101': ConnectionShutdown('Connection <AsyncoreConnection(24713424) 10.0.0.101:9042 (closed)> is already closed',)})

If we specify the '--ssl' flag to cqlsh, we still get an error as we haven't provided a client certificate for the connection:

    cqlsh 10.0.0.101 -u cassandra -p cassandra --ssl

    Validation is enabled; SSL transport factory requires a valid certfile to be specified. Please provide path to the certfile in [ssl] section as 'certfile' option in /root/.cassandra/cqlshrc (or use [certfiles] section) or set SSL_CERTFILE environment variable.

This is where the client.pem file generated by the script is used. Copy this file into the .cassandra folder in your user home path, then create/edit a file called cqlshrc in the same folder with the following content:

    [connection]
    factory = cqlshlib.ssl.ssl_transport_factory

    [ssl]
    certfile = ~/.cassandra/client.pem
    validate = false
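If you need to set this up for several users or admin workstations, the copy and the cqlshrc file can be scripted together. This is a sketch only: `setup_cqlsh_ssl` is a hypothetical helper, it writes the absolute path to client.pem rather than the `~` form, and it overwrites any existing cqlshrc in the target directory, so back that up first if you have one.

```shell
#!/bin/sh
# Copy a node's client.pem into a cqlsh config directory and write a matching cqlshrc.
# Usage: setup_cqlsh_ssl CLIENT_PEM [CONFIG_DIR]   (CONFIG_DIR defaults to ~/.cassandra)
# Note: replaces any existing cqlshrc in CONFIG_DIR.
setup_cqlsh_ssl() {
    pem=$1
    dir=${2:-$HOME/.cassandra}
    mkdir -p "$dir" || return 1
    cp "$pem" "$dir/client.pem" || return 1
    cat > "$dir/cqlshrc" <<EOF
[connection]
factory = cqlshlib.ssl.ssl_transport_factory

[ssl]
certfile = $dir/client.pem
validate = false
EOF
}
```

From the directory where the certificates were generated, `setup_cqlsh_ssl node01/client.pem` would configure the current user on node01.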

Save the file and now we should be able to establish an encrypted session:

    cqlsh 10.0.0.101 -u cassandra -p cassandra --ssl

    Connected to My vCD Cluster at 10.0.0.101:9042.
    [cqlsh 5.0.1 | Cassandra 3.0.15 | CQL spec 3.4.0 | Native protocol v4]
    Use HELP for help.
    cassandra@cqlsh>

When configuring vCloud Director to use our new metrics cluster, we must first tell vCloud Director that it can trust the CA we've created for our Cassandra cluster by importing the public key of our CA (the myca.pem file generated by the script) into the vCloud Director cell server cacerts repository. Ludovic Rivallain has a great post written up at https://vuptime.io/2017/08/30/VMware-Patch-vCloudDirector-cacerts-file/ showing how to do this. Note that this must be performed on each vCloud Director cell server as the cacerts repository is not shared between them.

You should also add a new admin user to Cassandra with a complex password and disable the builtin 'cassandra' user account before using the cluster.
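As a rough illustration of what that involves, the two helpers below print the CQL statements for each step. They are a sketch under my own assumptions: the helper names and the role name `vcdadmin` are placeholders, and the statements use the role syntax available in Cassandra 3.0. The two steps need separate sessions, because Cassandra won't let a role remove its own superuser status: create the new role while logged in as 'cassandra', then disable 'cassandra' while logged in as the new role.

```shell
#!/bin/sh
# Print CQL that creates a new superuser role with login rights.
# Usage: create_admin_cql ROLE_NAME PASSWORD   (hypothetical helper)
create_admin_cql() {
    role=$1
    # CQL string literals escape a single quote by doubling it.
    pass=$(printf '%s' "$2" | sed "s/'/''/g")
    printf "CREATE ROLE %s WITH PASSWORD = '%s' AND SUPERUSER = true AND LOGIN = true;\n" "$role" "$pass"
}

# Print CQL that strips superuser and login rights from the built-in 'cassandra' role.
disable_default_cql() {
    printf "ALTER ROLE cassandra WITH SUPERUSER = false AND LOGIN = false;\n"
}
```

The output can be passed to cqlsh with its `-e` option, for example `cqlsh 10.0.0.101 -u cassandra -p cassandra --ssl -e "$(create_admin_cql vcdadmin 'MyNewPassword')"`, followed by `cqlsh 10.0.0.101 -u vcdadmin -p 'MyNewPassword' --ssl -e "$(disable_default_cql)"`. Confirm you can log in as the new role before running the second command.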

Finally, you can follow the VMware documentation (link) to configure your vCloud Director cell to use this Cassandra cluster for metrics storage.

It's reasonably easy to adjust this process to use an external CA rather than generating a new self-signed one, but this post is long enough already, so let me know in the comments if you'd like to see this and I'll write up a separate post detailing the changes.

As always, comments/corrections/feedback welcome.

Jon.